Data Transfer with Venadi

March 10, 2017 | Data Transfer HPC

venadi.ucsc.edu (IPv4 address: 128.114.109.74) is a Data Transfer Node (DTN), kindly provided by the Pacific Research Platform (PRP) to improve the data transfer performance of the Hyades cluster. The system was configured and tuned by John Graham at UCSD before being shipped to UCSC.

Hardware Specifications

Venadi is a FIONA box. FIONA stands for Flash I/O Network Appliance. Designed by Phil Papadopoulos and Tom DeFanti at UCSD, FIONA is a low-cost, flash memory-based data server appliance that can handle huge data flows. Here are the hardware specs of Venadi:

1) Chassis: Supermicro 3U Chassis SC836BE1C-R1K03B, with the following key features:

- 3U chassis; supports maximum motherboard sizes of 13.68” x 13” (E-ATX and ATX)

- 16 x 3.5” hot-swap SAS/SATA drive bays with SES2, optional 2 x 2.5” hot-swap drive bays

- 16-port 3U SAS3 12Gbps single-expander backplane, supports up to 16x 3.5-inch SAS3/SATA3 HDD/SSD

- 1U 800/1000W Redundant Titanium Single Output Power Supply W/PMbus

- 7 full-height & full-length expansion slot(s)

- 3 x 8cm hot-swap redundant PWM cooling fans

- 2 x 8cm hot-swap exhaust fans & air shroud

2) Motherboard: Supermicro X10SRL-F, with the following key features:

- Single socket R3 (LGA 2011) supports Intel Xeon processor E5-2600 v4/ v3 and E5-1600 v4/ v3 family

- Intel C612 chipset

- Up to 1TB ECC 3DS LRDIMM, up to DDR4- 2400MHz; 8x DIMM slots

- 7x PCI-E slots total:

  - 2 PCI-E 3.0 x8

  - 2 PCI-E 3.0 x8 (in x16)

  - 2 PCI-E 3.0 x4 (in x8) or 1 x8 + 1 x0 (auto-switch)

  - 1 PCI-E 2.0 x4 (in x8)

- Intel i210 Dual port GbE LAN

- 10x SATA3 (6Gbps) via C612

- 1x VGA, 2x COM, 1x TPM

- 4x USB 3.0 ports, 8x USB 2.0 ports

- 2x SuperDOM with built-in power

3) CPU

4) Memory

- 128 GB (8 x 16GB PC4-19200 CL17 Registered ECC DDR4-2400 1.2V)

5) SAS HBA

- LSI 9300-16i PCIe 3.0 SAS 12Gb/s SAS Host Bus Adapter (PCIe x8), with 4 x Supermicro Internal MiniSAS HD SFF-8643 50cm Cables (CBL-SAST-0532)

6) SSDs

- 2x Intel 535 Series 2.5” 240GB SATA III MLC SSDs, attached to onboard SATA ports

- 16x Intel 535 Series 2.5” 480GB SATA III MLC SSDs, attached to the LSI 9300-16i SAS HBA

7) Network Adapters

- 40GbE NIC: Mellanox ConnectX-3 Pro EN Single-Port 40/56 Gigabit Ethernet Adapter Card - Part ID: MCX313A-BCCT (PCIe x8)

- IB Adapter: Mellanox ConnectX-3 VPI InfiniBand Adapter Card, Single-Port, QSFP QDR IB (40Gb/s) and 10GbE - Part ID: MCX353A-QCBT (PCIe x8)

Storage

Boot Drives

The two Intel 240GB SSDs are attached to onboard SATA3 (6Gbps) ports and serve as boot drives. Two software RAID1 (mirror) volumes, md126 and md127, are created on the two SSDs; they are mounted at / and /boot, respectively.

# cat /proc/mdstat

Personalities : [raid1]

md126 : active raid1 sdq2[0] sdr2[1]

233248768 blocks super 1.2 [2/2] [UU]

bitmap: 0/2 pages [0KB], 65536KB chunk

md127 : active raid1 sdq1[0] sdr1[1]

1049536 blocks super 1.0 [2/2] [UU]

bitmap: 0/1 pages [0KB], 65536KB chunk

# df -h

Filesystem Size Used Avail Use% Mounted on

/dev/md126 223G 2.6G 220G 2% /

/dev/md127 1015M 165M 850M 17% /boot

ZFS

ZFS on Linux 0.6.5.8 is installed on Venadi. The following script was used to create a ZFS pool on the 16x Intel 480GB SSDs:

zpool create -f -m /bigdata bigdata -o ashift=12 raidz1 /dev/disk/by-id/ata-INTEL_SSDSC2BW480H6_CVTR61420020480EGN /dev/disk/by-id/ata-INTEL_SSDSC2BW480H6_CVTR614200AK480EGN /dev/disk/by-id/ata-INTEL_SSDSC2BW480H6_CVTR614200B3480EGN /dev/disk/by-id/ata-INTEL_SSDSC2BW480H6_CVTR614200RV480EGN /dev/disk/by-id/ata-INTEL_SSDSC2BW480H6_CVTR614200Z9480EGN /dev/disk/by-id/ata-INTEL_SSDSC2BW480H6_CVTR614200ZZ480EGN /dev/disk/by-id/ata-INTEL_SSDSC2BW480H6_CVTR614203A1480EGN /dev/disk/by-id/ata-INTEL_SSDSC2BW480H6_CVTR614203A5480EGN

zpool add -f bigdata -o ashift=12 raidz1 /dev/disk/by-id/ata-INTEL_SSDSC2BW480H6_CVTR614203AB480EGN /dev/disk/by-id/ata-INTEL_SSDSC2BW480H6_CVTR614203B2480EGN /dev/disk/by-id/ata-INTEL_SSDSC2BW480H6_CVTR614203B7480EGN /dev/disk/by-id/ata-INTEL_SSDSC2BW480H6_CVTR614203BB480EGN /dev/disk/by-id/ata-INTEL_SSDSC2BW480H6_CVTR614203BC480EGN /dev/disk/by-id/ata-INTEL_SSDSC2BW480H6_CVTR614203BH480EGN /dev/disk/by-id/ata-INTEL_SSDSC2BW480H6_CVTR614203WB480EGN /dev/disk/by-id/ata-INTEL_SSDSC2BW480H6_CVTR614203Y3480EGN

zfs set recordsize=1024K bigdata

zfs set checksum=off bigdata

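zfs set atime=off bigdata

So there are 2 raidz1 VDEVs (virtual devices), each on 8 SSDs; the zpool bigdata stripes across the 2 raidz1 VDEVs. Each raidz1 VDEV gives up one SSD's worth of space to parity, so the raw data capacity is 2 x 7 x 480 GB = 6.72 TB (about 6.1 TiB). After ZFS's own allocation overhead and reserved space, df reports a usable capacity of 5.7T: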

# df -h /bigdata

Filesystem Size Used Avail Use% Mounted on

bigdata 5.7T 0 5.7T 0% /bigdata

Storage IO Benchmarks

dd

We start with the humble dd:

$ dd if=/dev/zero of=/home/dong/10GB bs=1M count=10240

10240+0 records in

10240+0 records out

10737418240 bytes (11 GB) copied, 3.46705 s, 3.1 GB/s

$ dd if=/dev/zero of=/bigdata/dong/10GB bs=1M count=10240

10240+0 records in

10240+0 records out

10737418240 bytes (11 GB) copied, 2.21714 s, 4.8 GB/s

The numbers here are misleading:

- The default behavior of dd is to not sync. The above command will just commit 10 GB of data into a RAM buffer (write cache) and exit

- The inflated write speed to the software RAID1 volume was 3.1 GB/s, which is way higher than the rated speed of SATA 3 (6Gb/s = 0.75 GB/s)

- The inflated write speed to the ZFS volume (/bigdata) was 4.8 GB/s

- We get the same results even after we drop caches
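A quick sanity check makes the point. The rate dd prints is simply bytes divided by elapsed seconds, so it can be recomputed and compared with the SATA 3 ceiling. The sketch below redoes that arithmetic; the function name and the 8b/10b payload estimate are illustrative assumptions, not part of the original measurements.

```python
# Recompute the dd throughput figures and compare them with the SATA 3 limit.
SATA3_RAW_GBITS = 6.0                             # signalling rate in Gbit/s
SATA3_RAW_GBYTES = SATA3_RAW_GBITS / 8            # 0.75 GB/s, the figure quoted above
SATA3_PAYLOAD_GBYTES = SATA3_RAW_GBYTES * 8 / 10  # assumption: only 8b/10b coding overhead

def dd_rate_gb_per_s(num_bytes, seconds):
    """Throughput in decimal GB/s, the unit dd prints."""
    return num_bytes / seconds / 1e9

raid1 = dd_rate_gb_per_s(10737418240, 3.46705)    # software RAID1 (/home)
zfs = dd_rate_gb_per_s(10737418240, 2.21714)      # ZFS (/bigdata)

print(f"RAID1: {raid1:.1f} GB/s, {raid1 / SATA3_RAW_GBYTES:.1f}x the SATA 3 raw rate")
print(f"ZFS:   {zfs:.1f} GB/s")
```

A mirror has to write every block to both SSDs, so a sustained rate several times the per-port limit can only come from the page cache.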

Next we run dd with the option conv=fdatasync, which will cause dd to physically write output file data before finishing:

$ rm -f /home/dong/10GB /bigdata/dong/10GB

$ dd if=/dev/zero of=/home/dong/10GB bs=1M count=10240 conv=fdatasync

10240+0 records in

10240+0 records out

10737418240 bytes (11 GB) copied, 92.9101 s, 116 MB/s

$ dd if=/dev/zero of=/bigdata/dong/10GB bs=1M count=10240 conv=fdatasync

10240+0 records in

10240+0 records out

10737418240 bytes (11 GB) copied, 2.58296 s, 4.2 GB/s

The write speed to the software RAID1 volume dropped to an appallingly low 116 MB/s, while that to ZFS was only slightly lower at 4.2 GB/s.

We also run dd with the option oflag=dsync, which will cause dd to use synchronized I/O for data:

$ rm -f /home/dong/10GB /bigdata/dong/10GB

$ dd if=/dev/zero of=/home/dong/10GB bs=1M count=10240 oflag=dsync

10240+0 records in

10240+0 records out

10737418240 bytes (11 GB) copied, 154.844 s, 69.3 MB/s

$ dd if=/dev/zero of=/bigdata/dong/10GB bs=1M count=10240 oflag=dsync

10240+0 records in

10240+0 records out

10737418240 bytes (11 GB) copied, 75.3956 s, 142 MB/s

Now both numbers are abysmal: 69.3 MB/s for software RAID1 and 142 MB/s for ZFS. However, these numbers may be artificially low, because in this mode dd syncs after every block (bs), which here means every megabyte.
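To get a feel for what each synchronous write costs, divide the elapsed time by the number of blocks written. The sketch below does this for the two oflag=dsync runs above; the helper name is made up for illustration.

```python
# Average time per synchronous 1 MiB write in the oflag=dsync runs above.
def ms_per_block(elapsed_s, count):
    """Mean wall-clock time per block, in milliseconds."""
    return elapsed_s / count * 1000

raid1_ms = ms_per_block(154.844, 10240)   # software RAID1 (/home)
zfs_ms = ms_per_block(75.3956, 10240)     # ZFS (/bigdata)

print(f"RAID1: {raid1_ms:.1f} ms per 1 MiB block")
print(f"ZFS:   {zfs_ms:.1f} ms per 1 MiB block")
```

Paying a cost of this size 10240 times over is what drags the averages down.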

Raising bs to 1GB may give a slightly more accurate number:

# echo 3 > /proc/sys/vm/drop_caches

$ rm -f /home/dong/10GB /bigdata/dong/10GB

$ dd if=/dev/zero of=/home/dong/10GB bs=1G count=10 oflag=dsync

10+0 records in

10+0 records out

10737418240 bytes (11 GB) copied, 97.3427 s, 110 MB/s

$ dd if=/dev/zero of=/bigdata/dong/10GB bs=1G count=10 oflag=dsync

10+0 records in

10+0 records out

10737418240 bytes (11 GB) copied, 4.5812 s, 2.3 GB/s

bonnie++

We next move on to more sophisticated file system benchmarking tools. Bonnie++ is a benchmark suite that is aimed at performing a number of simple tests of hard drive and file system performance.

$ bonnie++ -d /bigdata/dong -m venadi -q > venadi.csv

$ cat venadi.csv | bon_csv2html

Here are the results:

bonnie++ Version 1.97, machine venadi, Size 252G:

| Test | Rate | % CPU | Latency |
|---|---|---|---|
| Sequential Output, Per Char | 243 K/sec | 99 | 34541us |
| Sequential Output, Block | 1476574 K/sec | 98 | 2879us |
| Sequential Output, Rewrite | 1133554 K/sec | 96 | 25738us |
| Sequential Input, Per Char | 742 K/sec | 99 | 15429us |
| Sequential Input, Block | 3530242 K/sec | 95 | 171ms |
| Random Seeks | 4029 /sec | 107 | 353ms |

File creation tests, Num Files 16:

| Test | /sec | % CPU | Latency |
|---|---|---|---|
| Sequential Create, Create | +++++ | +++ | 12814us |
| Sequential Create, Read | +++++ | +++ | 220us |
| Sequential Create, Delete | +++++ | +++ | 240us |
| Random Create, Create | +++++ | +++ | 25760us |
| Random Create, Read | +++++ | +++ | 5us |
| Random Create, Delete | +++++ | +++ | 58us |

A few quick notes:

- By default, bonnie++ uses datasets whose sizes are twice the amount of memory, in order to minimize the effect of file caching. The total memory of venadi is 128 GB.

- Block sequential write speed is 1476574 KB/s = 1.48 GB/s.

- Block sequential rewrite speed is 1133554 KB/s = 1.13 GB/s.

- Block sequential read speed is 3.53 GB/s.

- The ZFS volume delivers 4029 IOPS (Random Seeks in the table). By comparison, a 10,000 RPM SAS drive has ~140 IOPS.
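The conversions in these notes can be reproduced in a few lines. This is only a sketch of the arithmetic: it assumes decimal units (1 KB = 1000 bytes), which is what the rounded figures above imply, and the dictionary keys are labels chosen here for readability.

```python
# Convert bonnie++ K/sec figures to GB/s using decimal units (an assumption;
# with 1 KiB = 1024 bytes the results would be about 2.4% higher).
results_k_per_sec = {
    "block write": 1476574,
    "block rewrite": 1133554,
    "block read": 3530242,
}

def k_to_gb(k_per_sec):
    """K/sec to GB/s, treating K as 1000 bytes."""
    return k_per_sec * 1000 / 1e9

for name, k in results_k_per_sec.items():
    print(f"{name}: {k_to_gb(k):.2f} GB/s")

# Random-seek rate relative to the ~140 IOPS quoted for one 10,000 RPM SAS drive.
seek_ratio = 4029 / 140
print(f"random seeks: about {seek_ratio:.0f}x a single SAS drive")
```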

IOzone

IOzone is another popular filesystem benchmark tool. The benchmark generates and measures a variety of file operations. It tests file I/O performance for the following operations: read, write, re-read, re-write, read backwards, read strided, fread, fwrite, random read, pread, mmap, aio_read, aio_write.

By default, IOzone will automatically create temporary files of size from 64k to 512M, to perform various testing; and will generate a lot of data. Here we fix the test file size to 256 GB, twice the amount of memory. For the sake of time and space, we only test write and read speed:

$ cd /bigdata/dong

$ iozone -i 0 -i 1 -s 256g

File size set to 268435456 kB

Command line used: iozone -i 0 -i 1 -s 256g

Output is in kBytes/sec

Time Resolution = 0.000001 seconds.

Processor cache size set to 1024 kBytes.

Processor cache line size set to 32 bytes.

File stride size set to 17 * record size.

random random bkwd record stride

kB reclen write rewrite read reread read write read rewrite read fwrite frewrite fread freread

268435456 4 869842 504431 2281246 2247948

Note the write speed was only 0.87 GB/s, and rewrite speed was even slower at 0.50 GB/s!

Let’s do a full test with fixed file size of 8 GB:

$ iozone -a -s 8g

Auto Mode

File size set to 8388608 kB

Command line used: iozone -a -s 8g

Output is in kBytes/sec

Time Resolution = 0.000001 seconds.

Processor cache size set to 1024 kBytes.

Processor cache line size set to 32 bytes.

File stride size set to 17 * record size.

random random bkwd record stride

kB reclen write rewrite read reread read write read rewrite read fwrite frewrite fread freread

8388608 4 850821 879929 2400110 2395613 714628 65984 2214287 933579 2065772 862197 862430 2390387 2392090

8388608 8 1438496 1591588 4057049 4050153 1760470 131769 3551387 1813906 3378418 1540490 1538936 4046626 4056830

8388608 16 2180223 2633935 5677680 5677227 3850433 262065 4627046 3345919 4999957 2525735 2362514 5679179 5717538

8388608 32 2976787 4191447 7708850 7742646 6037254 531045 6636904 5827137 6588498 3779566 3525765 7786818 7782960

8388608 64 3722032 5910142 9056105 9124727 7782426 1106885 8889147 9571348 7594422 5042178 5016261 9043561 9087767

8388608 128 4268489 6764827 9361823 9412615 8407263 1971987 9125877 12766264 8405367 5570094 5756065 9278251 9337346

8388608 256 4343510 7587717 8863451 8896312 8547061 3468020 8847286 12647673 8444927 5934968 6161525 8912379 8939046

8388608 512 4389468 8111871 9233827 9344233 9208122 5328749 9233582 14076193 9187597 6558340 6311319 9311508 9331324

8388608 1024 6648581 7168058 9373498 9513018 9476789 8401504 9402003 15393947 9524501 5471139 5706443 9567712 9574768

8388608 2048 6950542 7381517 9553419 9589962 9572705 8233683 9426133 15502968 9552895 5145770 4953441 9099378 9513257

8388608 4096 6419954 6824912 9302082 9563546 9557784 7672088 9457994 14973138 9542811 4916278 4861478 9460746 9520295

8388608 8192 5060173 6015892 8648878 8943288 8949806 6065735 8436815 9771784 8909434 4404698 4206570 8682088 8943670

8388608 16384 4651332 4949372 6970853 6947932 6960327 5070153 7027752 6273450 6916203 3881253 4087340 7052799 7018022

Most likely, caching distorts the IOzone results for smaller files such as this one (8 GB fits easily in the 128 GB of memory)! The write speed reached a maximum of 6.95 GB/s, and read speed 9.55 GB/s, when record length (reclen) was 2048 kB.
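The peak figures can be picked out of the table mechanically. The sketch below copies only the reclen, write, and read columns from the 8 GB run and looks for the maxima; the variable names are illustrative, and decimal units are assumed when converting to GB/s.

```python
# Find the record length with the best write and read throughput in the 8 GB run.
# Columns copied from the IOzone output above: reclen (kB), write, read (kB/s).
rows = """
4      850821  2400110
8      1438496 4057049
16     2180223 5677680
32     2976787 7708850
64     3722032 9056105
128    4268489 9361823
256    4343510 8863451
512    4389468 9233827
1024   6648581 9373498
2048   6950542 9553419
4096   6419954 9302082
8192   5060173 8648878
16384  4651332 6970853
"""

table = [tuple(int(x) for x in line.split()) for line in rows.strip().splitlines()]

best_write = max(table, key=lambda r: r[1])
best_read = max(table, key=lambda r: r[2])

print(f"best write: {best_write[1] / 1e6:.2f} GB/s at reclen {best_write[0]} kB")
print(f"best read:  {best_read[2] / 1e6:.2f} GB/s at reclen {best_read[0]} kB")
```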

iperf3

Before we perform disk-to-disk data transfer tests between venadi and NERSC systems, it is worthwhile to measure the memory-to-memory performance of the network. We’ll test against the perfSONAR host at NERSC (perfsonar.nersc.gov).

Here is the route to perfsonar.nersc.gov:

$ tracepath perfsonar.nersc.gov

1?: [LOCALHOST] pmtu 9000

1: gateway 0.297ms

1: gateway 0.286ms

2: svl-hpr2--ucsc-100ge.cenic.net 1.498ms

3: hpr-esnet--svl-hpr2-100ge.cenic.net 1.564ms

4: sunn-cr5-br1.nersc.gov 3.319ms

5: br1-cr1.nersc.gov 2.985ms

6: perfsonar.nersc.gov 3.122ms reached

Resume: pmtu 9000 hops 6 back 6

Let’s perform a simple iperf3 test:

$ bwctl -T iperf3 -f m -t 10 -i 1 -c perfsonar.nersc.gov

bwctl: Using tool: iperf3

bwctl: 15 seconds until test results available

SENDER START

Connecting to host 128.55.199.18, port 5437

[ 15] local 128.114.109.74 port 42792 connected to 128.55.199.18 port 5437

[ ID] Interval Transfer Bandwidth Retr Cwnd

[ 15] 0.00-1.00 sec 3.81 GBytes 32721 Mbits/sec 0 14.0 MBytes

[ 15] 1.00-2.00 sec 4.40 GBytes 37831 Mbits/sec 0 14.7 MBytes

[ 15] 2.00-3.00 sec 4.42 GBytes 37943 Mbits/sec 0 14.7 MBytes

[ 15] 3.00-4.00 sec 4.44 GBytes 38122 Mbits/sec 0 14.7 MBytes

[ 15] 4.00-5.00 sec 4.40 GBytes 37802 Mbits/sec 0 14.7 MBytes

[ 15] 5.00-6.00 sec 4.40 GBytes 37823 Mbits/sec 0 14.7 MBytes

[ 15] 6.00-7.00 sec 4.41 GBytes 37867 Mbits/sec 0 14.7 MBytes

[ 15] 7.00-8.00 sec 4.40 GBytes 37778 Mbits/sec 0 14.7 MBytes

[ 15] 8.00-9.00 sec 4.40 GBytes 37800 Mbits/sec 0 14.7 MBytes

[ 15] 9.00-10.00 sec 4.41 GBytes 37843 Mbits/sec 0 14.7 MBytes

- - - - - - - - - - - - - - - - - - - - - - - - -

[ ID] Interval Transfer Bandwidth Retr

[ 15] 0.00-10.00 sec 43.5 GBytes 37353 Mbits/sec 0 sender

[ 15] 0.00-10.00 sec 43.5 GBytes 37333 Mbits/sec receiver

iperf Done.

SENDER END

Fantastic! At about 37.4 Gbits/sec, we almost reach the line rate of 40GbE!
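It is worth relating this result to the bandwidth-delay product (BDP) of the path, since a single TCP stream can send at most one congestion window per round trip. The sketch below is a rough estimate only: it assumes the 3.122 ms reported by tracepath approximates the round-trip time, and that iperf3's "MBytes" are binary megabytes.

```python
# Compare the iperf3 result with the 40GbE line rate and the path's BDP.
LINE_RATE_BPS = 40e9           # 40GbE
measured_bps = 37353e6         # sender average reported by iperf3
rtt_s = 3.122e-3               # assumption: tracepath time to perfsonar.nersc.gov is the RTT
cwnd_bytes = 14.7 * 2**20      # assumption: iperf3 "MBytes" means MiB

utilization = measured_bps / LINE_RATE_BPS
bdp_bytes = LINE_RATE_BPS * rtt_s / 8        # bytes in flight needed to fill the pipe
window_limit_bps = cwnd_bytes * 8 / rtt_s    # ceiling with one window per RTT

print(f"utilization: {utilization:.1%}")
print(f"BDP at line rate: {bdp_bytes / 2**20:.1f} MiB")
print(f"window-limited rate: {window_limit_bps / 1e9:.1f} Gbit/s")
```

Under these assumptions the observed congestion window is close to the BDP, which is consistent with a single stream nearly filling the link.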

Data Transfer with NERSC

NERSC generally recommends transferring data to and from NERSC using Globus Online. They also support the following tools:

- SCP/SFTP: for smaller files (<1GB).

- BaBar Copy (bbcp): for large files

- GridFTP: for large files

Let’s create a 100GB file with random data in my scratch directory on Cori:

$ ssh cori.nersc.gov

shawdong@cori12:~> cd $SCRATCH

shawdong@cori12:/global/cscratch1/sd/shawdong> ls

shawdong@cori12:/global/cscratch1/sd/shawdong> dd if=/dev/urandom of=100GB.dat bs=1M count=102400

102400+0 records in

102400+0 records out

107374182400 bytes (107 GB) copied, 7342.39 s, 14.6 MB/s

Cori’s scratch file system is also mounted on the NERSC Data Transfer Nodes (DTNs):

$ ssh dtn03.nersc.gov

-bash-4.1$ PS1='[\u@\h \w]\$ '

[shawdong@dtn03 ~]$ cd /global/cscratch1/sd/shawdong

[shawdong@dtn03 /global/cscratch1/sd/shawdong]$ ls -lh

total 101G

-rw-r----- 1 shawdong shawdong 100G Mar 7 15:17 100GB.dat

There are 4 DTNs at NERSC:

- dtn01.nersc.gov

- dtn02.nersc.gov

- dtn03.nersc.gov

- dtn04.nersc.gov

Each DTN has four 10GbE links (bonded as bond0) for transfers over the network and two FDR IB connections to the filesystem:

[shawdong@dtn03 ~]$ cat /proc/net/bonding/bond0

Ethernet Channel Bonding Driver: v3.7.1 (April 27, 2011)

Bonding Mode: load balancing (xor)

Transmit Hash Policy: layer3+4 (1)

MII Status: up

MII Polling Interval (ms): 100

Up Delay (ms): 0

Down Delay (ms): 0

Slave Interface: eth4

MII Status: up

Speed: 10000 Mbps

Duplex: full

Link Failure Count: 0

Permanent HW addr: f4:52:14:85:df:42

Slave queue ID: 0

Slave Interface: eth6

MII Status: up

Speed: 10000 Mbps

Duplex: full

Link Failure Count: 0

Permanent HW addr: f4:52:14:86:02:91

Slave queue ID: 0

Slave Interface: eth5

MII Status: up

Speed: 10000 Mbps

Duplex: full

Link Failure Count: 0

Permanent HW addr: f4:52:14:86:03:52

Slave queue ID: 0

Slave Interface: eth7

MII Status: up

Speed: 10000 Mbps

Duplex: full

Link Failure Count: 0

Permanent HW addr: f4:52:14:86:02:92

Slave queue ID: 0

[shawdong@dtn03 ~]$ ifconfig bond0.205

bond0.205 Link encap:Ethernet HWaddr F4:52:14:85:DF:42

inet addr:128.55.205.20 Bcast:128.55.205.255 Mask:255.255.255.0

inet6 addr: fe80::f652:14ff:fe85:df42/64 Scope:Link

UP BROADCAST RUNNING MULTICAST MTU:9000 Metric:1

RX packets:4141573997 errors:0 dropped:0 overruns:0 frame:0

TX packets:1839278967 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:19190749116620 (17.4 TiB) TX bytes:15318105800596 (13.9 TiB)

Here is the route from venadi to dtn03.nersc.gov:

$ tracepath dtn03.nersc.gov

1?: [LOCALHOST] pmtu 9000

1: gateway 0.588ms

1: gateway 0.272ms

2: svl-hpr2--ucsc-100ge.cenic.net 1.490ms

3: hpr-esnet--svl-hpr2-100ge.cenic.net 1.507ms

4: sunn-cr5-br1.nersc.gov 3.354ms

5: br1-cr2.nersc.gov 3.096ms

6: dtn03.nersc.gov 3.021ms reached

Resume: pmtu 9000 hops 6 back 6

scp/sftp

Although not recommended, let’s see how long it takes to download the 100GB data file from NERSC to venadi, via scp/sftp:

[dong@venadi ~]$ cd /bigdata/dong/

[dong@venadi dong]$ scp shawdong@dtn04.nersc.gov:/global/cscratch1/sd/shawdong/100GB.dat .

100GB.dat 100% 100GB 182.2MB/s 09:22

So we got on average 182.2MB/s (1.46 Gbps) via scp/sftp.
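The scp summary line is internally consistent, which can be checked with a short calculation. This sketch assumes that the file size and the rate printed by scp use the same binary megabyte, and it uses the same decimal conversion to Gbps as the text above.

```python
# Cross-check the scp summary line: rate in Gbps and the implied transfer time.
rate_mb_s = 182.2              # average rate printed by scp
size_mb = 100 * 1024           # 100GB file in the same MB unit (an assumption)

gbps = rate_mb_s * 8 / 1000    # same conversion as in the text
elapsed_s = size_mb / rate_mb_s
minutes, seconds = divmod(round(elapsed_s), 60)

print(f"{gbps:.2f} Gbps, {minutes:02d}:{seconds:02d} elapsed")
```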

BaBar Copy (bbcp)

Next let’s see how long it takes to download the 100GB data file from NERSC to venadi, via bbcp:

$ bbcp -z -P 10 -S "ssh -x -a -oFallBackToRsh=no %I -l %U %H /usr/common/usg/bin/bbcp" \

shawdong@dtn02.nersc.gov::/global/cscratch1/sd/shawdong/100GB.dat \

/bigdata/dong/100GB-bbcp.dat

bbcp: Warning: venadi.ucsc.edu is running a newer version of bbcp

bbcp: Creating /bigdata/dong/100GB-bbcp.dat

bbcp: 170307 15:40:36 3% done; 425.7 MB/s

bbcp: 170307 15:40:45 7% done; 416.3 MB/s

bbcp: 170307 15:40:54 11% done; 426.8 MB/s

bbcp: 170307 15:41:03 15% done; 429.5 MB/s

bbcp: 170307 15:41:12 18% done; 426.6 MB/s

bbcp: 170307 15:41:21 22% done; 433.1 MB/s

bbcp: 170307 15:41:30 27% done; 438.4 MB/s

bbcp: 170307 15:41:39 31% done; 441.7 MB/s

bbcp: 170307 15:41:48 35% done; 442.3 MB/s

bbcp: 170307 15:41:57 38% done; 442.1 MB/s

bbcp: 170307 15:42:06 42% done; 441.7 MB/s

bbcp: 170307 15:42:15 46% done; 438.0 MB/s

bbcp: 170307 15:42:24 49% done; 433.9 MB/s

bbcp: 170307 15:42:33 53% done; 434.6 MB/s

bbcp: 170307 15:42:42 57% done; 437.9 MB/s

bbcp: 170307 15:42:51 61% done; 440.0 MB/s

bbcp: 170307 15:43:00 66% done; 442.5 MB/s

bbcp: 170307 15:43:09 69% done; 441.5 MB/s

bbcp: 170307 15:43:18 73% done; 442.0 MB/s

bbcp: 170307 15:43:27 77% done; 441.8 MB/s

bbcp: 170307 15:43:36 81% done; 442.2 MB/s

bbcp: 170307 15:43:45 85% done; 443.6 MB/s

bbcp: 170307 15:43:54 90% done; 445.2 MB/s

bbcp: 170307 15:44:03 94% done; 445.9 MB/s

bbcp: 170307 15:44:12 98% done; 446.1 MB/s

So we got about 440 MB/s (3.52 Gbps) via bbcp, about 2.4 times the transfer speed via scp/sftp.

My 100GB data file is stored at /global/cscratch1/sd/shawdong/, which is on Cori's Lustre file system. Perhaps the lackluster transfer speed is due to the poor performance of the Lustre file system? It could be too busy; or it may not be fully optimized, since Cori is new.

Let’s test against Eli Dart’s dataset, which is stored on GPFS:

[shawdong@dtn01 ~]$ cd /global/project/projectdirs/mpccc/dart/test-data

[shawdong@dtn01 test-data]$ du -sh *

9.4G 10G.dat

47G 50G.dat

228G Climate-Large

229G Climate-Medium

First let’s download a single 50GB file:

[dong@venadi dong]$ bbcp -z -P 2 -S "ssh -x -a -oFallBackToRsh=no %I -l %U %H /usr/common/usg/bin/bbcp" shawdong@dtn02.nersc.gov::/global/project/projectdirs/mpccc/dart/test-data/50G.dat /bigdata/dong/50G-bbcp.dat

bbcp: Creating /bigdata/dong/50G-bbcp.dat

bbcp: 170310 10:00:45 3% done; 842.5 MB/s

bbcp: 170310 10:00:47 7% done; 906.9 MB/s

...

bbcp: 170310 10:01:29 96% done; 994.4 MB/s

bbcp: 170310 10:01:30 98% done; 994.4 MB/s

Great! We were getting close to 1GB/s!

Next, let’s download the whole dataset:

[dong@venadi dong]$ bbcp -z -r -P 10 -s 16 -S "ssh -x -a -oFallBackToRsh=no %I -l %U %H /usr/common/usg/bin/bbcp" shawdong@dtn02.nersc.gov::/global/project/projectdirs/mpccc/dart/test-data/ /bigdata/dong/

bbcp: Indexing files to be copied...

bbcp: Copying 130 files and 0 links in 3 directories.

bbcp: Creating /bigdata/dong//10G.dat

bbcp: Creating /bigdata/dong//50G.dat

bbcp: 170310 09:41:50 20% done; 1.0 GB/s

...

bbcp: Creating /bigdata/dong//Climate-Large/va_6hrLev_IPSL-CM5A-LR_rcp85_r3i1p1_202601010300-203512312100.nc

bbcp: 170310 09:49:50 48% done; 1.1 GB/s

bbcp: 170310 09:49:59 94% done; 1.0 GB/s

Sweet! We got a consistent 1GB/s and up when downloading the 520GB dataset!

GridFTP

Let’s see how long it takes to download the 100GB data file from NERSC to venadi, via GridFTP:

[dong@venadi dong]$ export MYPROXY_SERVER_DN="/DC=org/DC=opensciencegrid/O=Open Science Grid/OU=Services/CN=nerscca3.nersc.gov"

[dong@venadi dong]$ myproxy-logon -b -T -l shawdong -s nerscca.nersc.gov

Enter MyProxy pass phrase:

A credential has been received for user shawdong in /tmp/x509up_u1001.

Trust roots have been installed in /home/dong/.globus/certificates/.

However, at this moment, the NERSC DTNs were having some issue with ID mapping:

$ globus-url-copy -list gsiftp://shawdong@dtn01.nersc.gov/global/cscratch1/sd/shawdong/

gsiftp://shawdong@dtn01.nersc.gov/global/cscratch1/sd/shawdong/

error: globus_ftp_client: the server responded with an error

530 530-Login incorrect. : globus_gss_assist: Gridmap lookup failure: Could not map /DC=gov/DC=nersc/OU=People/CN=Shawfeng Dong 52255 to shawdong

530-

530 End.

But Edison works fine:

$ globus-url-copy -list gsiftp://shawdong@edisongrid.nersc.gov/scratch1/scratchdirs/shawdong/

gsiftp://shawdong@edisongrid.nersc.gov/scratch1/scratchdirs/shawdong/

100GB.dat

Let’s see how fast we can transfer a 100GB file from NERSC Edison to venadi:

$ globus-url-copy -vb -fast -p 8 gsiftp://shawdong@edisongrid.nersc.gov/scratch1/scratchdirs/shawdong/100GB.dat file:/bigdata/dong/100GB-gsiftp.dat

Source: gsiftp://shawdong@edisongrid.nersc.gov/scratch1/scratchdirs/shawdong/

Dest: file:/bigdata/dong/

100GB.dat -> 100GB-gsiftp.dat

106992500736 bytes 108.32 MB/sec avg 234.12 MB/sec inst

The average speed was only 108.32 MB/sec. But I noticed that the peak speed had reached 290 MB/s. Most likely, the limiting factor was the scratch file system, which is a busy resource shared by thousands of users!

Update: NERSC consultants confirmed that my account was not properly set up in NIM for the data transfer nodes. The issue has been fixed! Let’s test again:

[dong@venadi dong]$ export MYPROXY_SERVER_DN="/DC=org/DC=opensciencegrid/O=Open Science Grid/OU=Services/CN=nerscca3.nersc.gov"

[dong@venadi dong]$ myproxy-logon -l shawdong -s nerscca.nersc.gov

[dong@venadi dong]$ globus-url-copy -list gsiftp://shawdong@dtn01.nersc.gov/global/cscratch1/sd/shawdong/

gsiftp://shawdong@dtn01.nersc.gov/global/cscratch1/sd/shawdong/

100GB.dat

[dong@venadi dong]$ globus-url-copy -vb -fast -p 8 gsiftp://shawdong@dtn01.nersc.gov/global/cscratch1/sd/shawdong/100GB.dat file:/bigdata/dong/100GB-gsiftp.dat

Source: gsiftp://shawdong@dtn01.nersc.gov/global/cscratch1/sd/shawdong/

Dest: file:/bigdata/dong/

100GB.dat -> 100GB-gsiftp.dat

85760933888 bytes 164.23 MB/sec avg 222.00 MB/sec inst

The sluggish speed is likely due to the performance of Cori's Lustre file system.

Let’s test against Eli Dart’s dataset, which is stored on GPFS:

[dong@venadi dong]$ globus-url-copy -vb -fast -p 8 gsiftp://shawdong@dtn03.nersc.gov/global/project/projectdirs/mpccc/dart/test-data/50G.dat file:/bigdata/dong/50GB-gsiftp.dat

Source: gsiftp://shawdong@dtn03.nersc.gov/global/project/projectdirs/mpccc/dart/test-data/

Dest: file:/bigdata/dong/

50G.dat -> 50GB-gsiftp.dat

49888100352 bytes 1486.77 MB/sec avg 1780.96 MB/sec inst

We got a whopping average transfer speed of 1.487 GB/s, with a peak speed of almost 2GB/s!

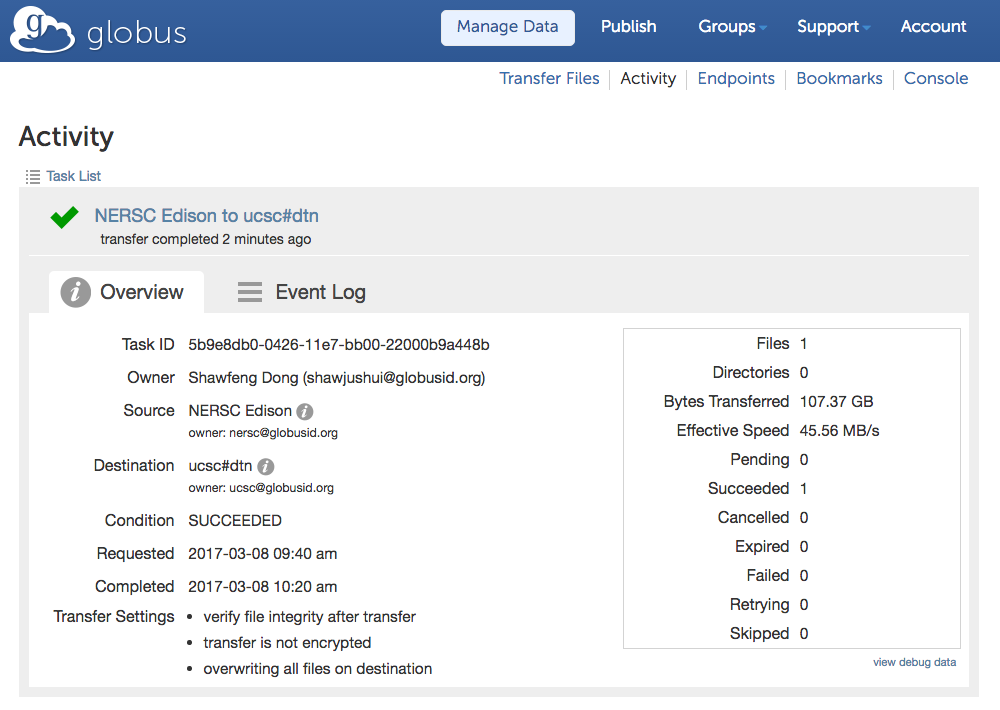

Globus Online

Lastly, let’s use Globus Online to transfer the 100GB file from Edison:

The paltry effective speed of 45.56 MB/s was mostly due to the long time spent in "verifying file integrity after transfer"; the actual transfer speed was about 110 MB/s, the same as that of GridFTP. Once again, the sluggish speed is likely due to the performance of Edison's Lustre file system. Tests against Eli Dart's dataset, which is stored on GPFS, should give much better results.

Quick Remarks

- UCSC’s SciDMZ is in excellent shape! A well-tuned host like venadi can nearly reach the 40GbE line rate in memory-to-memory transfers (about 37.4 Gbits/sec with iperf3);

- Local IO performance of FIONA boxes is fast! For small files, we observed a sequential write speed of 4.2 GB/s when using dd, and more with iozone; for big files, we observed a sequential write speed of 1.48 GB/s when using bonnie++.

- We are able to transfer files from NERSC GPFS to venadi at lightning fast disk-to-disk speeds:

  - we got a consistent transfer speed of 1GB/s using bbcp

  - we got an average transfer speed of almost 1.5 GB/s using GridFTP/Globus
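To put the disk-to-disk numbers side by side, the sketch below converts the rates reported in this post to Gbps and expresses each as a fraction of the 40GbE line rate. The labels are chosen here for readability, and decimal units are assumed throughout, so treat the percentages as rough.

```python
# Disk-to-disk rates reported above, as a fraction of the 40GbE line rate.
# Rates are in MB/s as printed by each tool; decimal units are an assumption.
LINE_RATE_GBPS = 40.0

observed_mb_s = {
    "scp (Lustre)": 182.2,
    "bbcp (Lustre)": 440.0,
    "bbcp (GPFS, 50G.dat)": 994.4,
    "GridFTP (Lustre)": 164.23,
    "GridFTP (GPFS, 50G.dat)": 1486.77,
}

def to_gbps(mb_per_s):
    """MB/s to Gbps, treating 1 MB as 10**6 bytes."""
    return mb_per_s * 8 / 1000

for tool, rate in observed_mb_s.items():
    gbps = to_gbps(rate)
    print(f"{tool:26s} {gbps:6.2f} Gbps  {gbps / LINE_RATE_GBPS:6.1%} of line rate")
```

Even the fastest disk-to-disk transfer uses under a third of what the network delivered memory-to-memory, which suggests that storage on either end, rather than the network, was the limiting factor in these tests.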